Dirty Secrets of the BookCorpus Dataset

A closer look at BookCorpus, the text dataset that helps train large language models for Google, OpenAI, Amazon, and others

Cover Photo by Javier Quiroga on Unsplash

BookCorpus has helped train at least thirty influential language models (including Google’s BERT, OpenAI’s GPT, and Amazon’s Bort), according to HuggingFace.

But what exactly is inside BookCorpus?

This is the research question that Nicholas Vincent and I ask in a new working paper that attempts to address some of the “documentation debt” in machine learning research — a concept discussed by Dr. Emily M. Bender and Dr. Timnit Gebru et al. in their Stochastic Parrots paper.

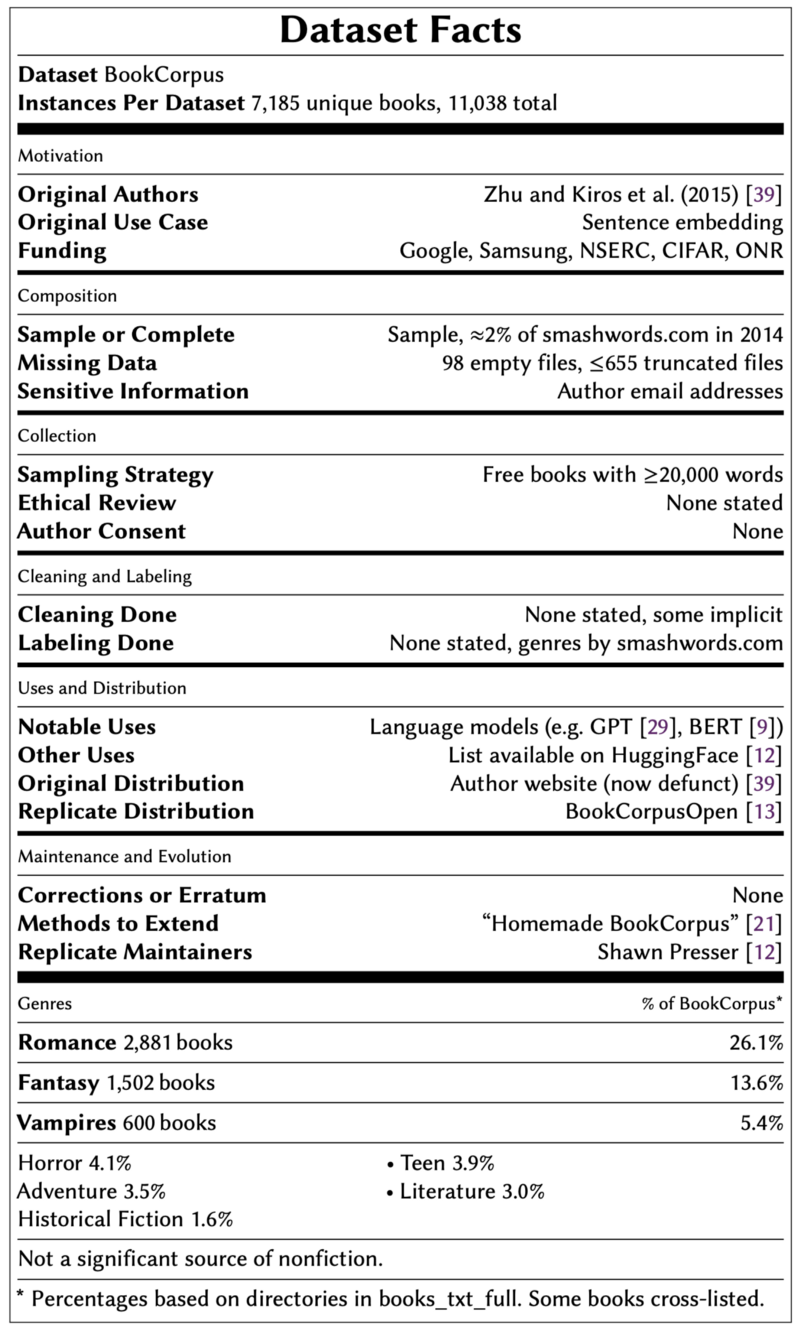

While many researchers have used BookCorpus since it was first introduced, documentation remains sparse. The original paper that introduced the dataset described it as a “corpus of 11,038 books from the web,” and provided six summary statistics (74 Million sentences, 984 Million words, etc.).

We decided to take a closer look — here is what we found.

General Notes

For context, it is first important to note that BookCorpus contains a sample of books from Smashwords.com, a website that describes itself as “the world’s largest distributor of indie ebooks.”

As of 2014, Smashwords hosted 336,400 books. For comparison, in the same year, the Library of Congress housed a total of 23,592,066 catalogued books (about seventy times as many).

The researchers who collected BookCorpus downloaded every free book longer than 20,000 words, which resulted in 11,038 books — a 3% sample of all books on Smashwords.com. But as discussed below, we found that thousands of these books were duplicates and only 7,185 were unique, so really BookCorpus is only a 2% sample of all books on Smashwords.

In the full datasheet, we provide information about funding (Google and Samsung were among the funding sources), the original use case for BookCorpus (sentence embedding), as well as other details outlined in the datasheet standard. For this blog post, I will highlight some of the more concerning findings.

🚨 Major Concerns

🙅🏻 Copyright Violations

In 2016, Richard Lea explained in The Guardian that Google did not seek consent from authors in BookCorpus, whose books help power Google’s technologies.

Going even further, we find evidence that BookCorpus directly violated copyright restrictions for hundreds of books that should not have been redistributed through a free dataset. For example, over 200 books in BookCorpus explicitly state that they “may not be reproduced, copied and distributed for commercial or non-commercial purposes.”

We also find that at least 406 books included in the free BookCorpus dataset now cost money on Smashwords, the dataset’s source. To purchase these 406 books would cost $1,182.21 as of April 2021.

📕📕 Duplicate Books

BookCorpus is often described as containing 11,038 books, which is what the original authors report. However, we found that thousands of books were duplicates, and in fact only 7,185 books in the dataset are unique. The exact breakdown is as follows:

- 4,255 books occurred once (i.e. were not duplicated)

- 2,101 books occurred twice 📕📕

- 741 books occurred thrice 📗📗📗

- 82 books occurred four times 📘📘📘📘

- 6 books occurred five times 📙📙📙📙📙

📊 Skewed Genre Representation

Compared to a new version called BookCorpusOpen, and another dataset of all the books on Smashwords (Smashwords21), the original BookCorpus has some significant genre skews. Here is a table with all the details:

Notably, BookCorpus over-represents the Romance genre, which is not necessarily surprising given broader patterns in self-publishing (authors consistently find that romance novels are in high demand). It also contains quite a few books in the Vampires genre, which may have been phased out given that no vampire books appear in Smashwords21.

In and of itself, skewed representation can lead to issues when training large language models. But as we looked at some “romance” novels, it became clear that some books pose further concerns.

⚠️ Potential Concerns that Need Further Attention

🔞 Problematic Content

While there is more work to be done in determining the extent of problematic content in BookCorpus, our analysis shows that it definitely exists. Consider, for example, one novel in BookCorpus called The Cop And The Girl From The Coffee Shop.

The book’s preamble clearly states that “the material in this book is intended for ages 18+.” On Smashwords, the book’s tags include “alpha male” and “submissive female.”

While there may be little harm from informed adults reading a book like this, feeding it as training material to language models would contribute to well-documented gender discrimination in these technologies.

🛐 Potentially Skewed Religious Representation

When it comes to discrimination, the recently-introduced BOLD framework also suggests looking at seven of the most common religions in the world: Sikhism, Judaism, Islam, Hinduism, Christianity, Buddhism, and Atheism.

While we do not yet have the appropriate metadata to fully analyze religious representation in BookCorpus, we did find that BookCorpusOpen and Smashwords21 exhibit skews, suggesting that this could also be an issue in the original BookCorpus dataset. Here is the breakdown:

More work is needed to clarify religious representation in the original version of BookCorpus, however, BookCorpus does use the same source as BookCorpusOpen and Smashwords21, so similar skews are likely.

⚖️ Lopsided Author Contributions

Another potential issue is lopsided author contributions. Again, we do not yet have all the metadata we would need for a complete analysis of BookCorpus, but we can make estimates based on our Smashwords21 dataset.

In Smashwords21, we found that author contributions were quite lopsided, with the top 10% of authors contributing 59% of all words in the dataset. Word contributions roughly follow the Pareto principle (i.e. the 80/20 rule), with the top 20% of authors contributing 75% of all words.

Similarly, in terms of book contributions, the top 10% of authors contributed 43% of all books. We even found some “super-authors,” like Kenneth Kee, who has published over 800 books.

If BookCorpus looks at all similar to Smashwords.com as a whole, then a majority of books in the dataset were probably written by a minority of authors. In many contexts, researchers may want to account for these lopsided contributions when using the dataset.

What’s Next?

With new NeurIPS standards for dataset documentation and even a whole new track devoted to datasets, hopefully the need for retrospective documentation efforts (like the one presented here) will decline.

In the meantime, efforts like this one can help us understand and improve the datasets that power machine learning.

If you want to learn more, you can check out our code and data here, and the full paper here, which includes the following “data card” summarizing our findings:

If you have questions/comments, head over to the Github discussion! You can also reach out directly to Nick or myself. Thanks for reading to the end 🙂